This article is the second in our three-part series about new features in FileMaker Pro 2025. Catch the other articles here:

- Part 1: New Non-AI Features

- Part 3: Server, AI, Security, WebDirect, and more

Claris first dipped its toes into AI features last year. FileMaker Pro 2024 (Version 21) brought semantic search script steps, such as Insert Embedding, Insert Embedding in Found Set, and Perform Semantic Find. These steps let developers turn text and images into numeric representations called embeddings, which can be used to find records with a similar meaning rather than relying on keyword matching.
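To make "similar meaning" concrete, here is a minimal Python sketch of how embeddings are typically compared, using cosine similarity. It is not FileMaker code and not necessarily how Perform Semantic Find is implemented; the three-number vectors and the record labels are made up for illustration, and real embeddings have hundreds or thousands of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_by_similarity(query_vec, records):
    """Return record labels ordered from most to least similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), label) for label, vec in records.items()]
    return [label for _, label in sorted(scored, reverse=True)]

# Toy, hand-written "embeddings" (assumption: a real model would produce these).
records = {
    "invoice overdue": [0.9, 0.1, 0.0],
    "payment is late": [0.8, 0.2, 0.1],
    "office picnic":   [0.0, 0.1, 0.9],
}
query = [0.85, 0.15, 0.05]  # stands in for the embedding of "unpaid bill"

print(rank_by_similarity(query, records))
```

The two billing-related records rank ahead of the picnic record even though none of them shares a keyword with "unpaid bill", which is the point of searching by meaning.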

FileMaker 2024 also provided support for additional model providers, including Cohere, and other enhancements, such as saving search results to JSON and trimming long log messages.

FileMaker Pro 2025 (v22) builds on the existing AI features, adding functionality to the Script workspace. Developers can now:

- ask AI questions in plain language,

- keep sensitive documents in a private knowledge base, and

- train small, purpose-built models.

What’s actually new in FileMaker 2025

Here’s a list of all of the new AI features in FileMaker 2025. (For more details, review the FileMaker release notes.)

| Script Step | Explanation |
| --- | --- |
| Perform SQL Query by Natural Language | Enter a request, for example, “List invoices over $10K this quarter.” FileMaker will ask the AI model to write the SQL query, run it, and return the response, all without sending data outside your system. |
| Perform Find by Natural Language | Converts a sentence (e.g., “Customers in Oregon who bought product X”) into a standard Find request and shows the results. |
| Generate Response from Model | Sends a prompt to a large language model (LLM) and gets back a text answer. “Agentic” mode lets the model call tools (like the steps above) when it needs more data. |
| Configure Prompt Template | Stores reusable prompt wording so every developer doesn’t have to reinvent it. |
| Configure RAG Account / Perform RAG Action | Builds and queries a retrieval-augmented generation (RAG) space. Think of this as a personal searchable library; the model cites real passages instead of guessing. |
| Fine-Tune Model | Teaches a base model new tricks using your data, but in a lightweight way called LoRA, which runs on FileMaker Server. |
| Save Records as JSONL | Exports records one per line, exactly the format Fine-Tune Model expects. |
| Configure Regression Model | Trains or loads a simple prediction model you can query with the new PredictFromModel function. |
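For readers who haven't met JSONL (JSON Lines) before, the format is simply one complete JSON object per line. The Python sketch below shows the shape of such a file; the `prompt` and `completion` keys are hypothetical, since the exact fields that Fine-Tune Model expects are not covered in this article.

```python
import json

def to_jsonl(records):
    """Serialize a list of dicts as JSON Lines: one JSON object per line."""
    return "".join(json.dumps(record, ensure_ascii=False) + "\n" for record in records)

# Hypothetical training rows; the key names are an assumption for illustration.
rows = [
    {"prompt": "What is our return window?", "completion": "30 days from delivery."},
    {"prompt": "Do we ship to Canada?", "completion": "Yes, via ground service."},
]

print(to_jsonl(rows), end="")
```

Because every line stands alone, a JSONL file can be streamed or appended to record by record, which suits large training sets better than one giant JSON array.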

New AI functions

These functions give developers low-level controls for debugging and vector math.

- GetFieldsOnLayout

- NormalizeEmbedding

- AddEmbeddings

- SubtractEmbeddings

- PredictFromModel

- GetRAGSpaceInfo
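Going by their names, NormalizeEmbedding, AddEmbeddings, and SubtractEmbeddings presumably perform the usual vector operations. The Python sketch below shows that math on plain lists; it is an illustration of the concepts only, and the function names and signatures here are ours, not FileMaker's.

```python
import math

def normalize(v):
    """Scale a vector to length 1 so that only its direction matters."""
    length = math.sqrt(sum(x * x for x in v))
    if length == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / length for x in v]

def add(a, b):
    """Element-wise sum of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [x + y for x, y in zip(a, b)]

def subtract(a, b):
    """Element-wise difference of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return [x - y for x, y in zip(a, b)]

print(normalize([3.0, 4.0]))
print(add([1.0, 2.0], [0.5, 0.5]))
print(subtract([1.0, 2.0], [0.5, 0.5]))
```

Combining embeddings this way is commonly used to blend several concepts into one search vector, or to steer a search away from an unwanted concept by subtracting it.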

The Configure AI Account step now recognizes Anthropic as a provider, widening your options beyond OpenAI and Cohere.

How these additions help in the real world

Not all new features are created equal. Some are merely enhancements or bug fixes, while others bring genuinely new functionality with practical, real-world impact. Here are a few from the second group:

- Ask questions, skip the query language. Staff no longer need to know how to write SQL or understand FileMaker’s Find syntax. They can extract insights simply by asking questions. For example, a manager can type “Show top-selling products this month” and get results immediately.

- Keep private data private. Retrieval-augmented generation (RAG) lets developers load internal PDFs, policies, or manuals into a local knowledge space. When users ask questions, the answer is built from those documents—reducing hallucinations and keeping content in-house.

- Customize the model to your industry. With Fine-Tune Model, a medical clinic can train the AI on its procedure notes, improving accuracy on domain-specific terms without sending records to a cloud vendor.

- Fast predictions from your own data. Embeddings can be paired with Configure Regression Model directly in a script step to forecast numbers, for example, the expected delivery time from a customer chat. No external machine-learning stack needed.

- Reuse and share prompts. Configure Prompt Template allows a single, well-crafted prompt to be referenced across scripts, promoting consistency and making audits easier.
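The RAG idea in the list above can be sketched in a few lines of Python: find the passages most relevant to a question, then hand them to the model along with the question. This is a toy under stated assumptions. It ranks passages by shared words rather than by embeddings, the documents and file names are invented, and it stops at building the prompt rather than calling a model. It is not how FileMaker's RAG steps are implemented.

```python
import re

def words(text):
    """Lowercased set of alphanumeric words in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, passages, top_k=2):
    """Return up to top_k passages sharing the most words with the question."""
    q = words(question)
    ranked = sorted(passages, key=lambda p: len(q & words(p["text"])), reverse=True)
    return [p for p in ranked[:top_k] if q & words(p["text"])]

def build_prompt(question, passages, top_k=2):
    """Compose a prompt that asks the model to answer only from retrieved passages."""
    hits = retrieve(question, passages, top_k)
    context = "\n".join(f"[{p['source']}] {p['text']}" for p in hits)
    return (
        "Answer using only the passages below and cite the source in brackets.\n\n"
        f"{context}\n\nQuestion: {question}"
    )

# Hypothetical knowledge base entries.
passages = [
    {"source": "pto-policy.pdf", "text": "Employees accrue 1.5 vacation days per month."},
    {"source": "expense-manual.pdf", "text": "Submit expense reports within 30 days of purchase."},
    {"source": "it-handbook.pdf", "text": "Reset your password every 90 days."},
]

print(build_prompt("How many vacation days do employees accrue?", passages, top_k=1))
```

Because the model is told to answer from the supplied passages and name its source, a reader can check the answer against the original document.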

Experimenting with Perform Find by Natural Language

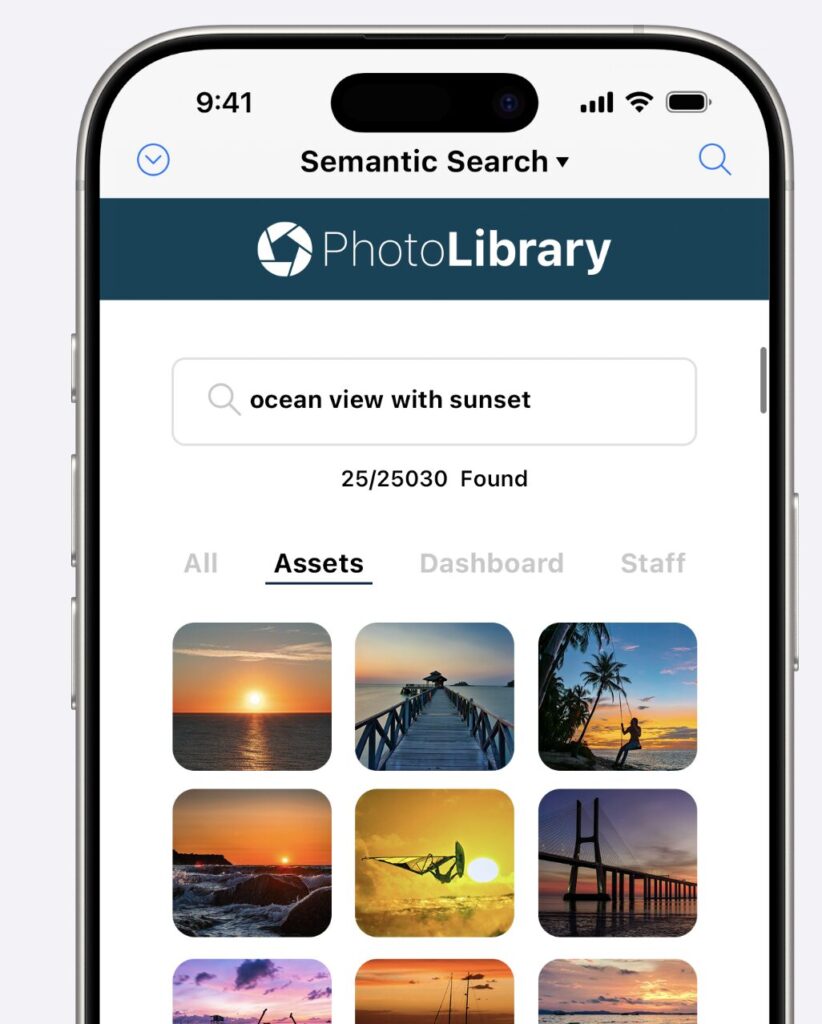

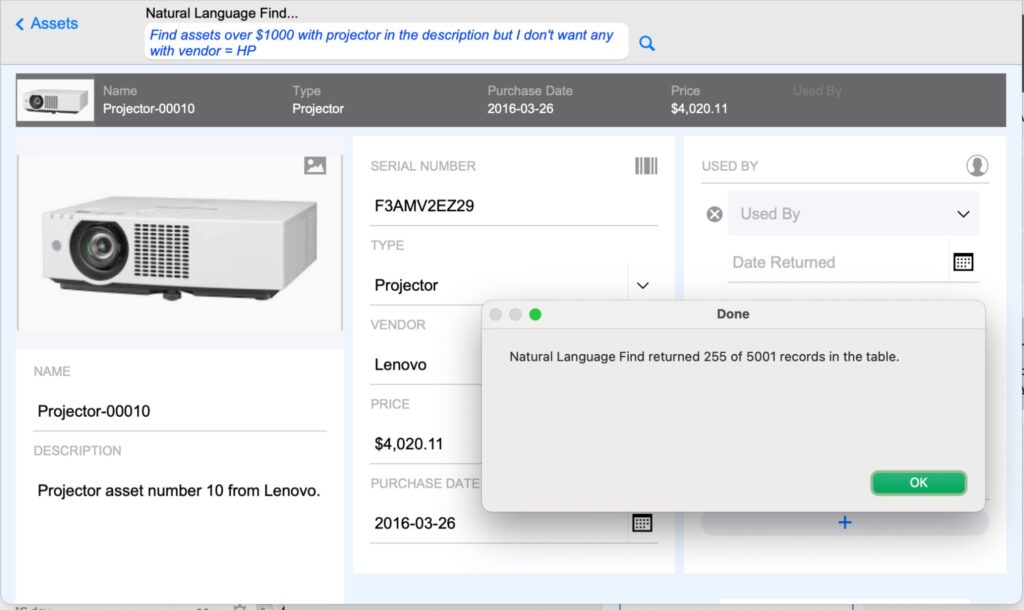

One of the most immediately useful additions in FileMaker Pro 2025 is the new Perform Find by Natural Language script step. This step allows developers to replace the traditional Quick Find field at the top of a layout with a more intuitive input—one where users can type a natural language query, such as “Show me all customers from Oregon who purchased in the last 30 days.”

The real value here is user empowerment: complex search logic can now be executed without requiring users to understand Find mode, omit requests, or use special operators. Instead, they describe what they want in plain English, and FileMaker translates that into a standard Find request.

There are two important implementation details worth highlighting:

- No record data is sent to the AI model. Only the user’s query string, along with the names of the fields on the layout so the model knows what it can search on, is submitted. The model returns one or more Find requests, which FileMaker then executes locally, just like any other Find. You can also press Ctrl+R (or Command+R on a Mac) to run the Modify Last Find command and inspect or edit the requests that were generated. Because record contents stay inside your FileMaker solution, this design is well suited to security and compliance requirements.

- Token usage is minimal. Very few tokens are used because only the user’s natural language query and field names are sent, not record data. As a result, the step can be used across large datasets and high-traffic layouts without generating significant API costs.
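The division of labor described above (the model writes the find requests, the database runs them locally) can be illustrated with a short Python sketch. The request structure here is hypothetical and is not FileMaker's actual format; matching is simplified to a case-insensitive substring test, and requests are not processed in order as a real Find would.

```python
def matches(record, request):
    """True if every criterion in the request matches the record (AND within a request)."""
    return all(
        str(criterion).lower() in str(record.get(field, "")).lower()
        for field, criterion in request["criteria"].items()
    )

def perform_find(records, requests):
    """Keep records matching any normal request, then drop those matching any omit request."""
    include = [r for r in requests if not r.get("omit")]
    omit = [r for r in requests if r.get("omit")]
    # With only omit requests, start from all records.
    found = [rec for rec in records if any(matches(rec, r) for r in include)] if include else list(records)
    return [rec for rec in found if not any(matches(rec, r) for r in omit)]

# Local data: never sent to the model in this sketch.
customers = [
    {"Name": "Ava", "State": "Oregon", "Status": "Active"},
    {"Name": "Ben", "State": "Oregon", "Status": "Inactive"},
    {"Name": "Cy", "State": "Idaho", "Status": "Active"},
]

# What a model might return for "Oregon customers, but not inactive ones" (assumed shape).
requests = [
    {"criteria": {"State": "Oregon"}},
    {"criteria": {"Status": "Inactive"}, "omit": True},
]

print([c["Name"] for c in perform_find(customers, requests)])
```

The model only ever sees the sentence and produces the small `requests` structure; the customer rows are filtered entirely on the local side.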

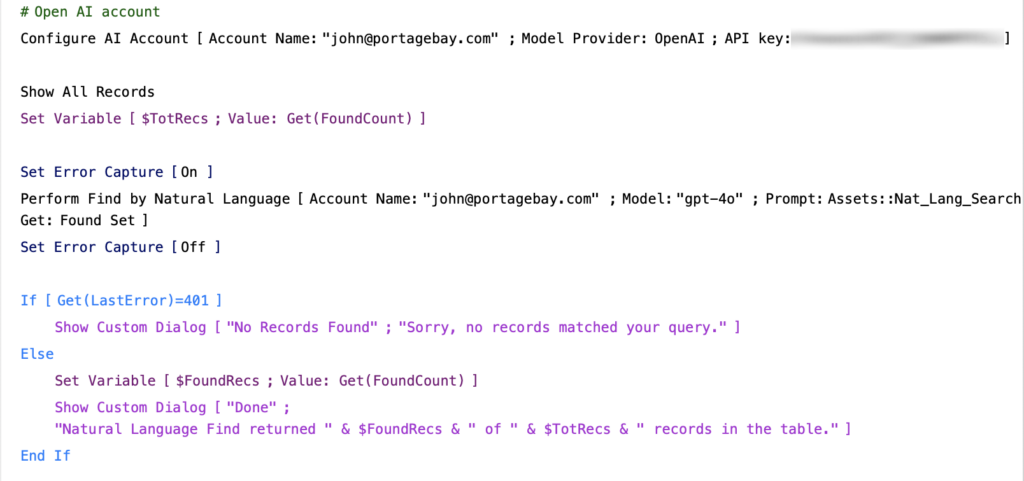

In our testing, we used our OpenAI account. To test at a moderate scale, we generated 5,000 sample records using ChatGPT and imported them into the blank Assets starter template. We then added a global field to a list layout and connected a simple script that invokes the Perform Find by Natural Language step using the value of that global. The first minor issue we encountered was with model naming: OpenAI requires the model name to be specified exactly as documented, for example “gpt-4o” in all lowercase. Our initial attempt used uppercase, which caused the call to fail.
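A small guard can catch the model-name casing problem before a call is ever made. The Python sketch below checks a configured name against a developer-maintained list and suggests the correctly cased spelling; the `KNOWN_MODELS` list and the function name are hypothetical, and the same check could be written as a FileMaker calculation.

```python
# Hypothetical allow-list that a developer would maintain by hand.
KNOWN_MODELS = {"gpt-4o", "gpt-4o-mini"}

def check_model_name(name, known=KNOWN_MODELS):
    """Return the name if it is an exact match; otherwise raise with a hint."""
    if name in known:
        return name
    by_lower = {model.lower(): model for model in known}
    suggestion = by_lower.get(name.strip().lower())
    if suggestion:
        raise ValueError(f"Unknown model {name!r}; did you mean {suggestion!r}?")
    raise ValueError(f"Unknown model {name!r}")

print(check_model_name("gpt-4o"))
try:
    check_model_name("GPT-4o")
except ValueError as err:
    print(err)
```

Failing early with a "did you mean" message is cheaper to diagnose than a rejected API call.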

The second issue we ran into was an insufficient token budget on our OpenAI account. After topping up the account, the feature worked smoothly, and we were able to run a variety of natural language queries successfully.

Some advantages of FileMaker native AI

- One security model. Existing privilege sets and encryption in FileMaker extend to these AI calls.

- No plug-ins to update. Everything ships from Claris, signed and documented.

- Performance by design. Calls that only need the schema or an embedding vector keep full record data on-premises, trimming latency and risk.

- Single source of truth. Prompts, templates, and AI logic live in standard scripts where any developer can read and control them.

Caveats and considerations

While the new features are impressive and bring significant AI capabilities to FileMaker, there are still some things to bear in mind.

- You still need an AI account. OpenAI, Anthropic, or another supported provider must be configured before any call will run.

- Prompt design is development work. Clear wording, thorough testing, and logging remain essential.

- Governance first. Even with RAG, decide which documents go into a space and who can query it.

- Server version alignment. Fine-tuning and RAG require FileMaker Server 2025 or the separate AI Model Server. Plan upgrades accordingly.

- Local LLM and server performance. While it’s possible to run a local LLM on your FileMaker Server, performance constraints may lead you to host the LLM on a separate server for anything beyond the lightest testing.

A pilot project is easiest when an experienced developer can keep the scope realistic and ensure prototypes grow into maintainable features.

Developing with confidence

FileMaker Pro 2025 doesn’t promise “AI at the click of a button.” Instead, it extends last year’s groundwork with natural-language querying, private knowledge bases, and fine-tuning—tools that let developers add artificial intelligence where it solves real problems.

At Portage Bay Solutions, we’re already helping prototype these ideas:

- RAG spaces for policy manuals,

- find-by-sentence search screens, and

- lightweight prediction engines.

If you’d like an AI readiness review or a hands-on sprint, let’s talk. We can put FileMaker’s growing AI toolkit to work for your business securely, responsibly, and on the timelines you need.

About the author

John Newhoff’s business background and years of database design, computer and network configuration, and troubleshooting experience allow him to see beyond the immediate problem to the long-term solution. John is the business manager of Portage Bay and our lead 4th Dimension developer, creating sophisticated cross-platform solutions.

This piece represents a collaboration between the human authors and AI technologies, which assisted in both drafting and refinement. The authors maintain full responsibility for the final content.