At Claris Engage 2025, our very own Xandon Frogget hosted a session on Leveraging Local LLMs. In order to bring this information to as many people as possible, Xandon has rerecorded his session and we’ve put it on our YouTube channel.

Following is a brief overview of Xandon’s presentation where he discusses the process, use cases, and recommended models such as Gemma 3, LLaMA 3, DeepSeek, and QwQ. Catch the YouTube video for more in-depth details.

Why Should You Choose Local LLMs?

Xandon: The answer comes down to four core advantages.

- Enhanced security. Your data stays on your hardware, drastically reducing the risk of breaches.

- Cost efficiency. You can say goodbye to those unpredictable usage fees and embrace a more manageable and often lower total cost.

- Complete data control. You’re in charge, and you decide how your data is used and who has access.

- Improved performance. Local processing means lower latency and reliable operation regardless of the internet connectivity.

These are the four pillars from the foundation of why local LLMs are a game changer for FileMaker development.

What Cool Things Can You Do With a Local LLM?

1. Output information from an ingredient label into HTML

Xandon: I took a photo of a package of cheese, and asked the LLM, “What can you tell me about this cheese and what does it pair well with?” So, just based off of that image, the LLM is going to take that information and do some pretty cool stuff with it.

I converted its output into HTML, and we can see all of the information it pulled from the label, including the ingredients and price, and the LLM was able to determine the cheese’s origin, flavor profile as well as wine and fruits that pair well. For example, drizzling honey on the cheese enhances the flavor. And all this is coming back from the LLM based on the information that it got from the label and combining it with information it was trained on from other sources.

2. Create a tutor to assist with homework

Another example is asking the LLM to solve an algebra equation from an image of my kid’s homework. The LLM doesn’t need this in a typed out format. Using only the image, the LLM is able to interpret the equation and output HTML showing step by step how to solve this equation. So it not only gives us the answer, but it also provides a breakdown to understand the steps required to solve the problem.

3. Transcribe information from an image

Another way you can use LLMs is to transcribe information from an image. We used a photo of a package of chocolate and asked the LLM to transcribe it, convert it to JSON, and then create HTML. The LLM found the tagline, nutritional information, ingredients, manufacturer, and barcode. It also added some additional things, such as clickable links to the manufacturer’s website.

How Do Cloud-Based AI Options Compare to Local LLMs?

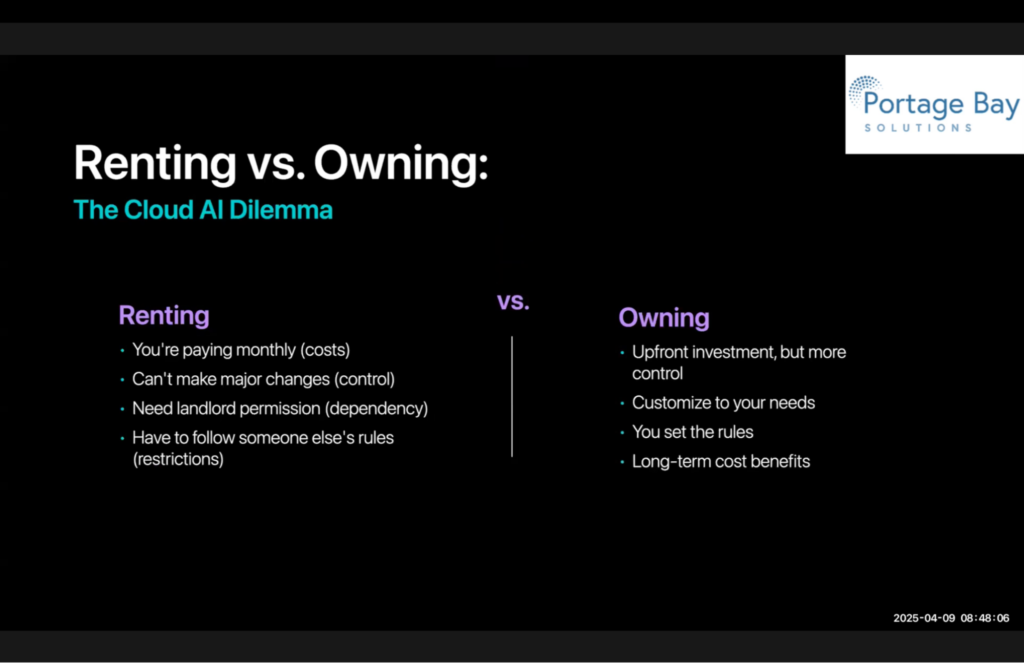

Xandon: Think of cloud AI like renting–you have monthly payments. You can’t make major changes. You don’t have control. You have to ask the landlord’s permission, which is a dependency, anytime you need to do something, and you’re always following someone else’s rules. These are restrictions that you have.

In contrast, a local LLM is like owning–you do have an upfront investment. But you can customize it to your needs. You set the rules, and you have long-term cost benefits.

Why Should Businesses Be Interested in a Local LLM?

Companies need AI out of necessity, but there are barriers. They find it expensive and risky, and they worry about security. With things like vendor lock-in, they feel trapped. Creating a company-level LLM resolves some of those issues and provides direct analysis of your own data.

What Are Some Ways to Manage Access and Use?

Xandon: We can add users to an account, just like we have an admin account, and control their access. They can use the system just like ChatGPT, and ask questions of whatever LLMs that we want to provide for them, and we have full control over that. We can create a group and give the permissions to the group.

We can do all sorts of settings to control different access and what models they have access to, as well as what they can and can’t do. So we can really make sure that they’re working within whatever the parameters are for our company, to make sure we’re in alignment with our policies.

We can also control the models that they have access to, so we can assign the group that has access to the model.

If I use my own family as an example, I pay for various LLM accounts. However, I can’t share them with my family members. With a local LLM, I can set up separate users, groups, and permissions to control, for example, what my kids can access. I can limit them to a specific model, control their interaction, as well as see their chat history, and put some real strong guardrails.

I don’t want my kids using it to complete their homework. I want it to act as a tutor and help them learn.

With a local LLM, I can also select multiple models and compare the results. You can add as many models as you want into that list and get the best results when you’re trying to debug which model should I use for trying to solve problems in your FileMaker solution.

How Do You Set Up a Local LLM?

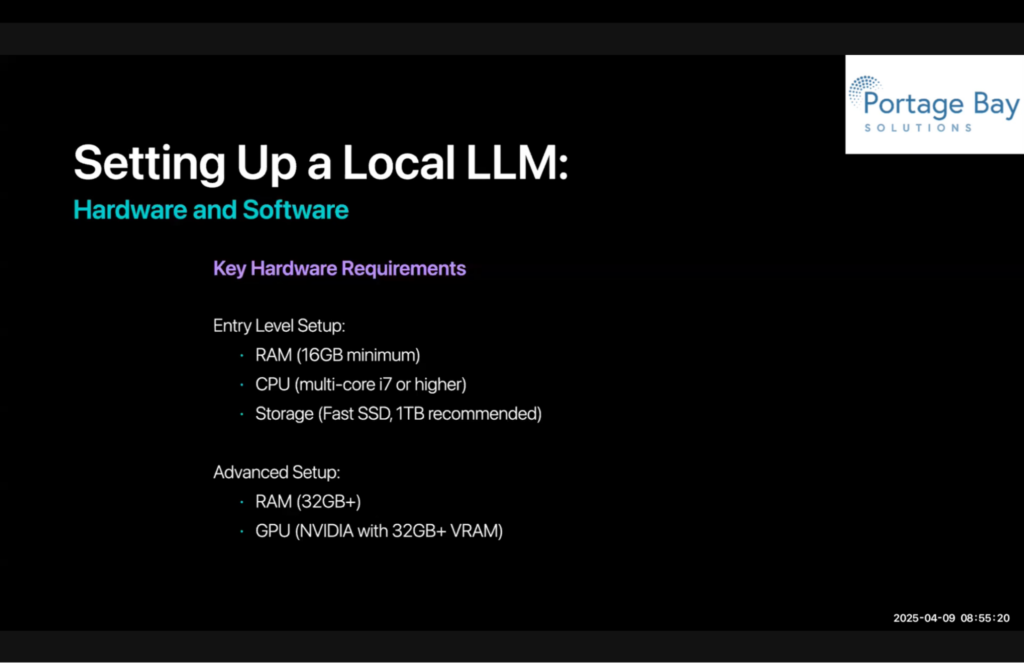

Xandon: For an entry-level setup, you’ll need at least 16 GB of RAM, a good multicore CPU, and a fast SSD. For more advanced solutions, the bare minimum is 32 GB of RAM and a powerful Nvidia GPU. And if you have an M series Macintosh, you want to have plenty of RAM for that chip, as well. As an example, the 70 billion parameter LLaMa 3 requires 32 to 64 GM of RAM to run efficiently.

Which LLM Models Do You Recommend?

Xandon:

- Gemma 3 – Google’s open-source, multilingual, image-ready model.

- LLaMA 3 – Powerful and flexible.

- DeepSeek-V1, QwQ – Great for reasoning.

Any Final Thoughts?

Xandon: Local LLMs provide a powerful way to integrate advanced, private AI into FileMaker. It’s more straightforward than you think, and the impact is undeniable. I encourage you to explore the resources and join community groups.